Ferg's Finds

This is a short weekly email that covers a few things I’ve found interesting during the week.

Article(s)

OPEC 2024 World Oil Outlook 2050 (some great graphs painting a very different picture to the IEA's Net-Zero forecasts).

Comparative Analysis of Monthly Reports on the Oil Market

The big difference between OPEC and the IEA is their non-OECD demand growth forecasts.

Podcast/Video

Matt never disappoints with his coal insights: Matt Warder talks coal markets: how does coal morph into AI play, election, $BTU, $CEIX $ARCH merger

Quote

“The most satisfying form of freedom is not a life without responsibilities, but a life where you are free to choose your responsibilities.”

- James Clear

Tweet

There will be a heavy price for pretending these projects were economic, namely inflation.

Charts

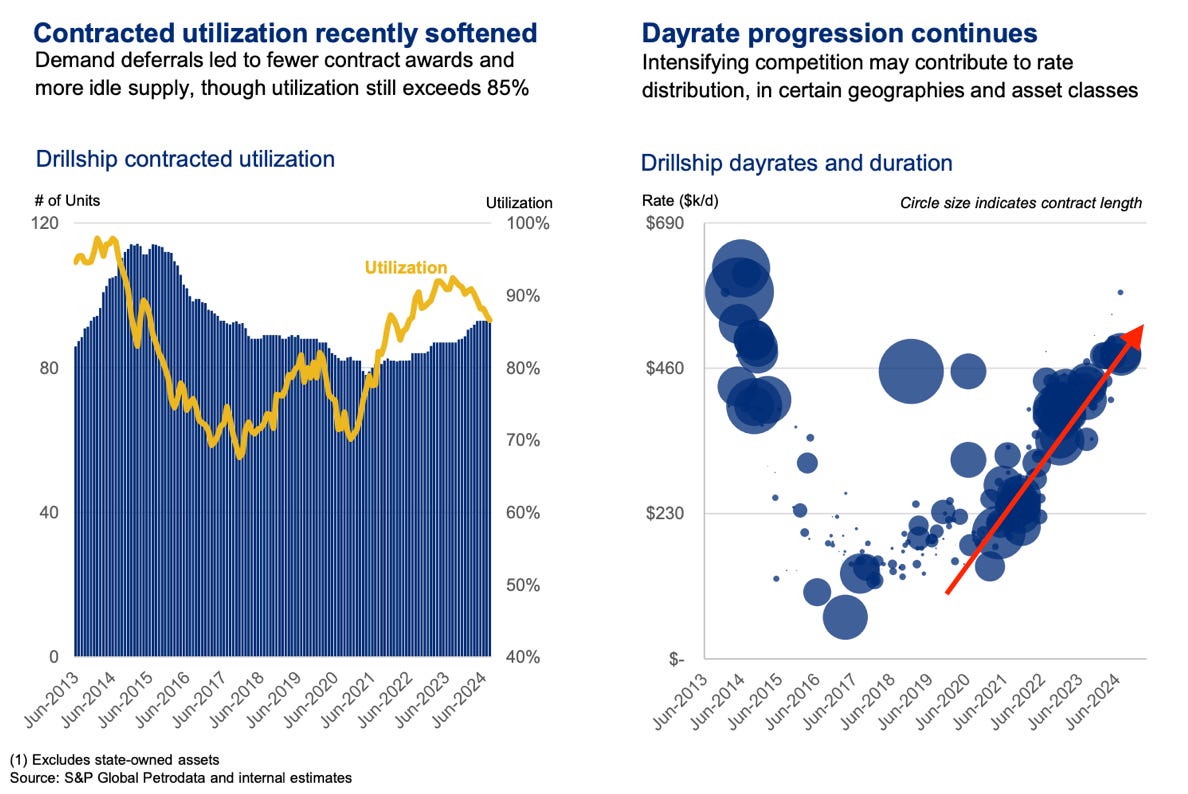

While utilisation increases have softened recently, the trend is clear. There are still 12 cold-stacked or undelivered drillships, and once those are activated, the real party starts.

Something I'm Pondering

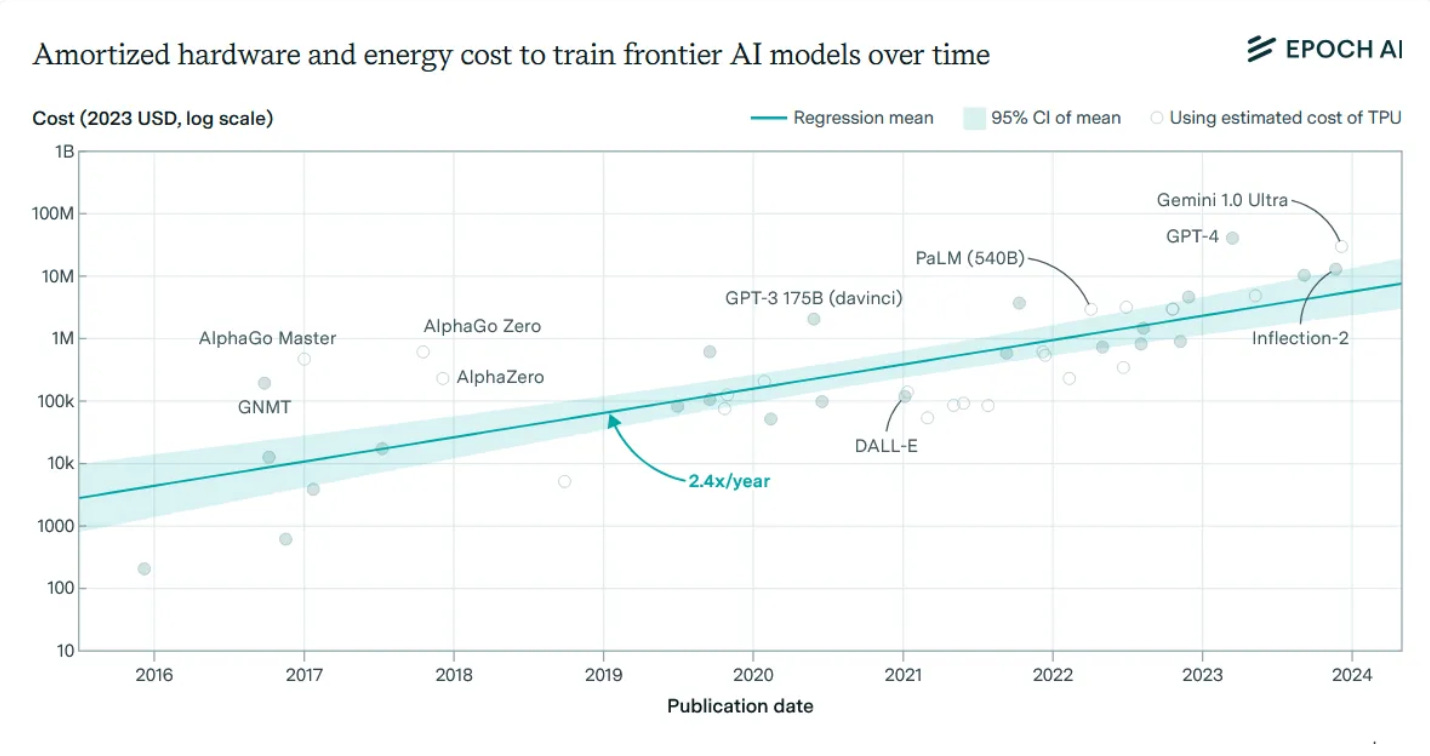

I'm pondering how much money is going to be thrown at winning this AI race and how access to quality baseload power is the limiting factor.

This was an insightful read: Scaling: The State of Play in AI

So, for simplicity’s sake, let me propose the following very rough labels for the frontier models. Note that these generation labels are my own simplified categorization to help illustrate the progression of model capabilities, not official industry terminology:

- Gen1 Models (2022): These are models with the capability of ChatGPT-3.5, the OpenAI model that kicked off the Generative AI whirlwind. They require less than 10^25 FLOPs of compute and typically cost $10M or under to train. There are many Gen1 models, including open-source versions.

- Gen2 Models (2023-2024): These are models with the capability of GPT-4, the first model of its class. They require roughly between 10^25 and 10^26 FLOPs of compute and might cost $100M or more to train. There are now multiple Gen2 models.

- Gen3 Models (2025?-2026?): As of now, there are no Gen3 models in the wild, but we know that a number of them are planned for release soon, including GPT-5 and Grok 3. They require between 10^26 and 10^27 FLOPs of compute and a billion dollars (or more) to train.

- Gen4 Models, and beyond: We will likely see Gen4 models in a couple of years, and they may cost over $10B to train. Few insiders I have spoken to expect the benefits of scaling to end before Gen4, at a minimum. Beyond that, it may be possible that scaling could increase a full 1,000 times beyond Gen3 by the end of the decade, but it isn’t clear. This is why there is so much discussion about how to get the energy and data needed to power future models.
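To put that "1,000 times beyond Gen3" line into numbers, here is a rough sketch in Python. It only uses the compute ranges quoted above; the variable names are my own, and it assumes the 1,000x applies to training compute (FLOPs), which is how I read the passage.

```python
# Compute figures quoted in the generation labels above.
GEN1_MAX_FLOPS = 10**25            # Gen1: "less than 10^25 FLOPs"
GEN3_FLOPS = (10**26, 10**27)      # Gen3: "between 10^26 and 10^27 FLOPs"
SCALE_UP = 1_000                   # "a full 1,000 times beyond Gen3"

# Assumption: the 1,000x multiplier applies to training compute.
scaled_low, scaled_high = (flops * SCALE_UP for flops in GEN3_FLOPS)

# How many times larger than the Gen1 ceiling that would be.
vs_gen1_low = scaled_low // GEN1_MAX_FLOPS
vs_gen1_high = scaled_high // GEN1_MAX_FLOPS

print(f"End-of-decade scenario: {scaled_low:.0e} to {scaled_high:.0e} FLOPs")
print(f"That is {vs_gen1_low:,}x to {vs_gen1_high:,}x the Gen1 ceiling")
```

This says nothing about cost or power draw, which depend on chip efficiency and are not given in the quote.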

I mean, the numbers quickly get nuts. Take the example below:

Joe Dominguez, CEO of Constellation Energy Corp., said he has heard that Altman is talking about building 5 to 7 data centers that are each 5 gigawatts.

Seven data centres at 5GW each is 35GW, or 36% of the entire US nuclear fleet's output (94 reactors putting out 97GW)!
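If you want to check the maths or plug in the low end of the range, here is a quick back-of-the-envelope sketch in Python. The inputs are the figures quoted above; the function and variable names are my own, and it assumes every data centre draws its full 5GW around the clock and that the 97GW fleet figure is the right thing to compare against.

```python
# Figures from the text above.
GW_PER_DATA_CENTRE = 5
DATA_CENTRES_HIGH = 7      # top of the "5 to 7" range
DATA_CENTRES_LOW = 5       # bottom of the range
US_NUCLEAR_FLEET_GW = 97
US_REACTORS = 94


def share_of_nuclear_fleet(n_centres: int, gw_each: float, fleet_gw: float) -> float:
    """Total data centre demand as a fraction of nuclear fleet output."""
    return n_centres * gw_each / fleet_gw


total_gw = DATA_CENTRES_HIGH * GW_PER_DATA_CENTRE
share_high = share_of_nuclear_fleet(DATA_CENTRES_HIGH, GW_PER_DATA_CENTRE, US_NUCLEAR_FLEET_GW)
share_low = share_of_nuclear_fleet(DATA_CENTRES_LOW, GW_PER_DATA_CENTRE, US_NUCLEAR_FLEET_GW)

# Rough "how many average reactors is that" figure.
avg_reactor_gw = US_NUCLEAR_FLEET_GW / US_REACTORS
reactor_equivalents = total_gw / avg_reactor_gw

print(f"High case: {total_gw} GW, {share_high:.0%} of the fleet")
print(f"Low case: {share_low:.0%} of the fleet")
print(f"High case in average-reactor terms: {reactor_equivalents:.1f}")
```

Even the low case of five data centres works out to roughly a quarter of the fleet.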

It's ironic that funds were poured into renewables and ESG-approved tech companies while baseload generation (coal, gas, and nuclear) was starved of capital, and baseload is now exactly what those tech companies need to scale AI.

I hope you’re all having a great week.

Cheers,

Ferg

P.S. I now have a directory for all my articles (free and paid) and a full overview of my portfolio position by position here.

Or if you’re interested in my story and why I started this Substack, you can read the story here.

I just had a thought: where will all the dollars once flowing to Russian assets go if commodity prices rise?